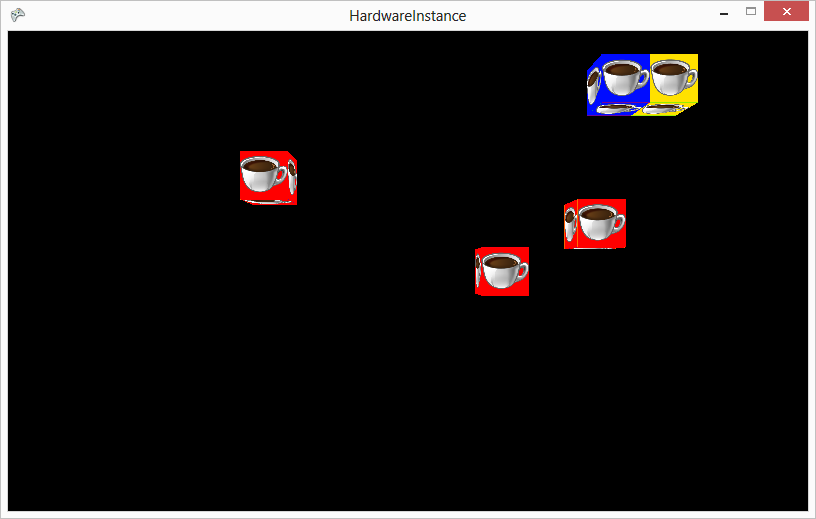

After finding the hardware instancing example for XNA, I started turning the code into a library so that I could use it in my game. This wasn’t too difficult, because I just had to create a ModelDisplayer class and add methods to pass in the GraphicsDevice, data array (block locations), model, bones, and camera information. Finally, I added a Draw method which displays blocks according to the positions in the data array, using a DynamicVertexBuffer which accepts the Matrix transforms in its SetData method. The code is flexible enough to accept any 2D array of row and column positions for the blocks.
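As a rough sketch of the "2D array in, one transform per block out" step (not the actual library code), the conversion might look like the following. The `BlockInstances` class and `BuildTransforms` method are hypothetical names, the convention that a non-zero cell means "block here" is an assumption, and `System.Numerics.Matrix4x4` stands in for XNA's `Matrix` so the snippet compiles outside of XNA.

```csharp
using System.Collections.Generic;
using System.Numerics; // stand-in for Microsoft.Xna.Framework.Matrix (assumption)

public static class BlockInstances
{
    // Builds one world transform per non-empty cell of the block map.
    // Assumptions: map[row, col] != 0 marks a block, and each cell is one unit wide and tall.
    public static Matrix4x4[] BuildTransforms(int[,] map)
    {
        var transforms = new List<Matrix4x4>();
        for (int row = 0; row < map.GetLength(0); row++)
        {
            for (int col = 0; col < map.GetLength(1); col++)
            {
                if (map[row, col] != 0)
                {
                    // Row 0 is treated as the top of the room, so Y decreases as the row increases.
                    transforms.Add(Matrix4x4.CreateTranslation(col, -row, 0f));
                }
            }
        }
        return transforms.ToArray();
    }
}
```

In the XNA version, the resulting array is what would be handed to the DynamicVertexBuffer's SetData method before drawing.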

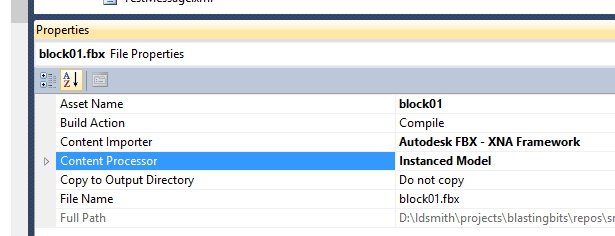

After building my new library, I was able to add a reference to it in my BlastingBits game and set my block model to use the Instanced Model processor.

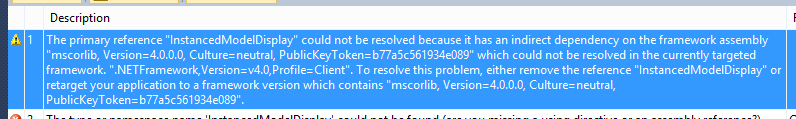

As with the SkinnedModelProcessor, it is necessary to build the library for both Windows and Xbox 360. Otherwise, the build produces an obscure framework warning which prevents the library from being used.

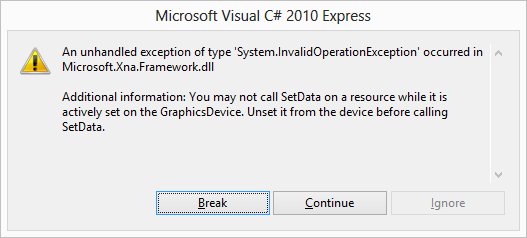

The new InstancedModel library seemed to work great in my initial tests, but I found two problems. First, on the Xbox 360 only, it would randomly throw an error in the SetData method. I don’t understand all of the technical details, but I did deduce that this error only occurred because I was calling the DrawModelHardwareInstancing method multiple times in my main Draw method. This was basically running the instance display code three times: once for the blocks in the current room, and once each for the blocks in the rooms to the left and right. Removing the calls to display blocks in the adjacent rooms made the error go away on the Xbox 360. Therefore, I combined the block arrays of all three rooms into one array, and called the hardware instance draw method only once.
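Combining the three rooms can be sketched roughly like this. It is a minimal illustration, assuming every room is an `int[,]` of the same size and that the rooms sit side by side from left to right; `RoomMerger` and `Combine` are hypothetical names, not part of the game or the sample.

```csharp
public static class RoomMerger
{
    // Copies the left, current, and right rooms into one wide array so the
    // instanced draw method only has to be called once per frame.
    // Assumption: all three rooms have identical dimensions.
    public static int[,] Combine(int[,] left, int[,] current, int[,] right)
    {
        int rows = current.GetLength(0);
        int cols = current.GetLength(1);
        var combined = new int[rows, cols * 3];
        var rooms = new[] { left, current, right };

        for (int room = 0; room < rooms.Length; room++)
        {
            for (int row = 0; row < rows; row++)
            {
                for (int col = 0; col < cols; col++)
                {
                    // Each room is shifted right by one room width.
                    combined[row, room * cols + col] = rooms[room][row, col];
                }
            }
        }
        return combined;
    }
}
```

The combined array can then be passed to the draw method exactly as a single room was before.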

The single draw call seemed to work well, but the second problem remained: the library would not display my own block and texture when I imported my FBX model and PNG texture file. The example uses an FBX model, but I could never find the associated texture. My only guess is that the texture is somehow built into the FBX file. However, I’ve never seen an example of how to load a packed texture from an FBX file generated by Blender, so I’ve always loaded the texture file separately into the Content project. Therefore, I was stuck with the example block model, which has a cat texture mapped to it that I couldn’t change.

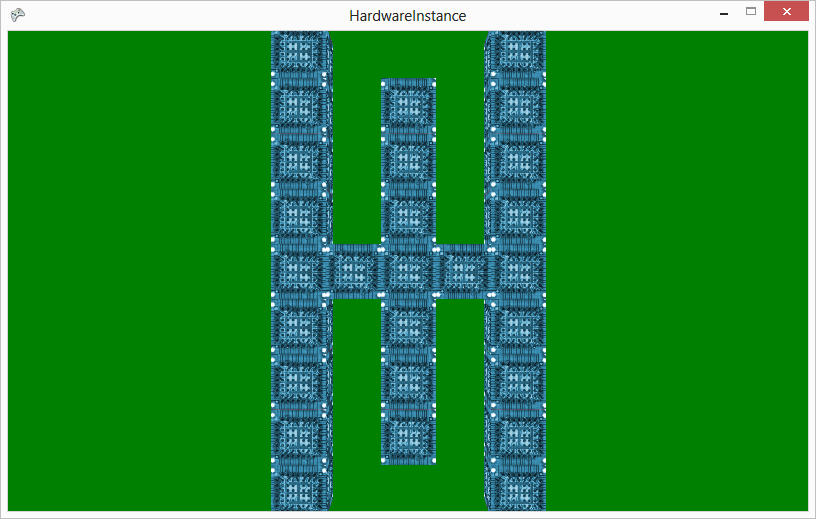

Fortunately, I found a really great article at float 4×4 (no author listed) that explains a similar hardware instancing technique built around a “texture atlas”. This is even better than the previous code, because it allows textures for a model to be changed at runtime, and it has all the benefits of hardware instancing to eliminate slowdown. It’s like palette swapping for a 3D model.
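The core idea of a texture atlas is that many small textures live in one big image, and each block just picks its own rectangle of texture coordinates. The sketch below is not the article's code; it assumes the atlas is a regular grid of equally sized tiles, and `UvRect`, `AtlasMath`, and `TileUv` are hypothetical names.

```csharp
// Rectangle inside the atlas, in 0..1 texture coordinates.
public struct UvRect
{
    public float U, V, Width, Height;
}

public static class AtlasMath
{
    // Returns the texture-coordinate rectangle for a tile index,
    // counting tiles left to right, then top to bottom.
    // Assumption: the atlas is an evenly divided grid.
    public static UvRect TileUv(int tileIndex, int tilesPerRow, int tilesPerColumn)
    {
        float width = 1f / tilesPerRow;
        float height = 1f / tilesPerColumn;
        return new UvRect
        {
            U = (tileIndex % tilesPerRow) * width,
            V = (tileIndex / tilesPerRow) * height, // integer division selects the row
            Width = width,
            Height = height
        };
    }
}
```

Changing a block's look at runtime then only means giving that instance a different tile index, with no change to the model or the bound texture.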

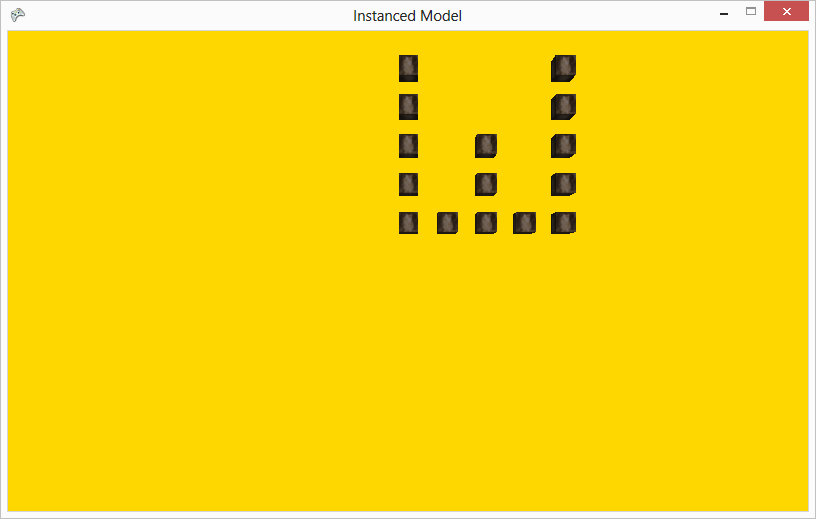

I modified the example code to eliminate the random zoom and spinning, so that it displays blocks at X/Y cells, similar to my game maps. It looks good, but it doesn’t do lighting, so I will need to see if it is possible to add that.

As with the previous code, I extracted all of the rendering-specific code into its own library, so that I can link to it in the game. I only want to handle the loading of the textures in my main game code. This code does not import a model (it just draws cubes), so there is no model processor. I manually updated the vertex locations to make the blocks cubes with a width, height, and depth of one (-0.5f to 0.5f). Similar to what I did in the previous example, I added a method which takes a 2D array as a parameter, so I can pass the block map to the AtlasDisplay to draw the blocks. The only thing I wasn’t able to include in the library is the FX Effect file, since it has to go into the content project. I’m not sure how to include that in the library, unless I create a second library just for that file, which is what I may do in the future.
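For reference, the eight corner positions of a unit cube centered on the origin can be generated like this. It is only a sketch of the -0.5f to 0.5f layout; `UnitCube` and `Corners` are hypothetical names, and `System.Numerics.Vector3` stands in for XNA's `Vector3`.

```csharp
using System.Numerics; // stand-in for Microsoft.Xna.Framework.Vector3 (assumption)

public static class UnitCube
{
    // Returns the 8 corners of a cube that spans -0.5 to 0.5 on every axis.
    public static Vector3[] Corners()
    {
        var corners = new Vector3[8];
        for (int i = 0; i < 8; i++)
        {
            // Bits 0, 1, and 2 of i choose the low or high side on X, Y, and Z.
            corners[i] = new Vector3(
                (i & 1) == 0 ? -0.5f : 0.5f,
                (i & 2) == 0 ? -0.5f : 0.5f,
                (i & 4) == 0 ? -0.5f : 0.5f);
        }
        return corners;
    }
}
```

A textured cube usually needs separate vertices per face so that each face can carry its own texture coordinates, so these corners are only the positions those vertices share.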

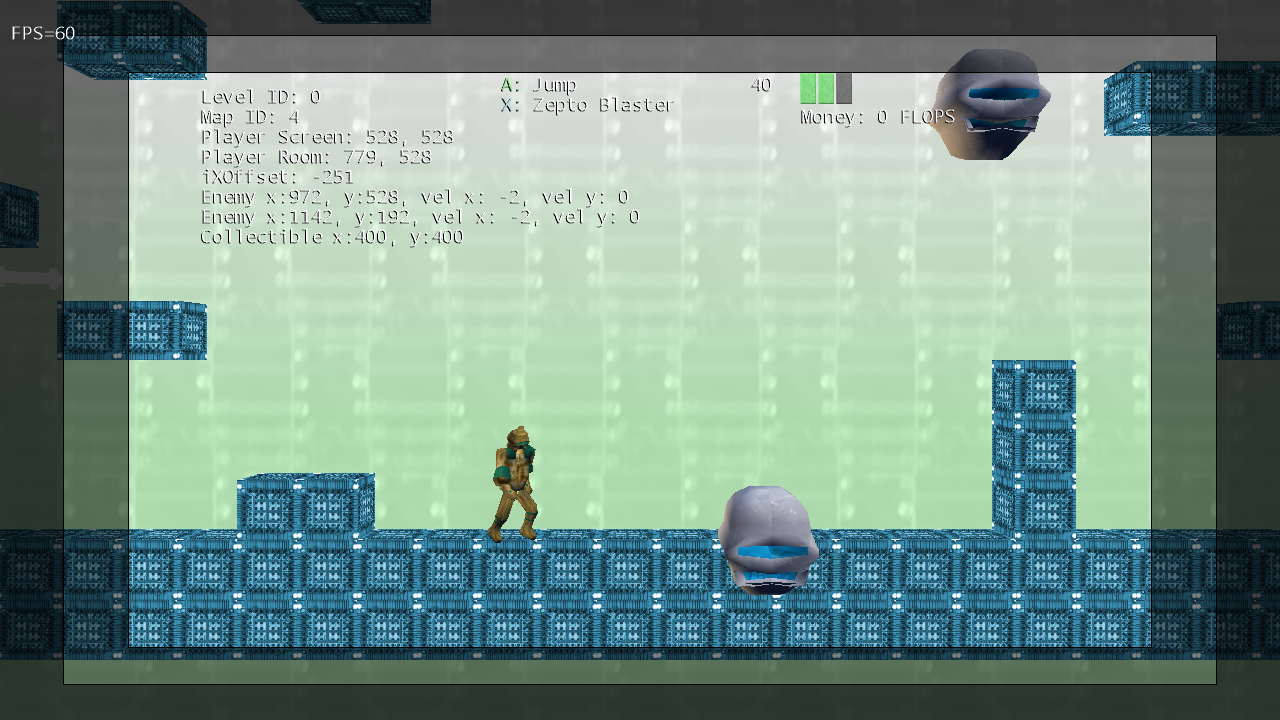

I set up the new TextureAtlas, Effect, and Texture2D in my main game, and passed the instance of the TextureAtlas to the GameScreen3D class. I set up all the necessary calls, and eventually I got the new block display using the texture atlas to work. It now renders the blocks for all three rooms, and I am getting a solid 60 FPS on the Xbox 360. One thing to watch out for is that the code has a variable containing the number of blocks to render, which I currently have set to 1000. For three rooms, the current maximum number of blocks is 1170 (390 * 3), but most rooms will probably never have more than half of the blocks filled in the room array. Overall, the update to use the texture atlas method for hardware instancing was quite a bit of work for little noticeable change, but it was better to go ahead and solve the slowness issue now so that it isn’t a problem once I begin designing the levels.
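One simple way to guard against that limit is to count the filled cells and never ask for more instances than the buffer was sized for. This is a sketch under assumptions: it treats the 1000 value as a hard cap on instances, it assumes a non-zero cell means a block, and `InstanceBudget` and its members are hypothetical names.

```csharp
using System;

public static class InstanceBudget
{
    // Block count from the post; treated here as a hard cap (assumption).
    public const int MaxInstances = 1000;

    // Counts the cells that actually contain a block (non-zero cells, by assumption).
    public static int CountBlocks(int[,] map)
    {
        int count = 0;
        foreach (int cell in map)
        {
            if (cell != 0) count++;
        }
        return count;
    }

    // Number of instances that can safely be drawn for this map.
    public static int InstancesToDraw(int[,] map)
    {
        return Math.Min(CountBlocks(map), MaxInstances);
    }
}
```

If `CountBlocks` ever comes back higher than `MaxInstances`, that is the signal to raise the limit rather than silently dropping blocks.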

Yeah, I was running through the same Microsoft Sample and was really perplexed for a few minutes on where the texture was being included into the model. I opened up the FBX file and looked through every line to see if the texture was baked into the model. It was not. I then noticed that the *Content Processor* for the model had been changed to a *custom* content processor, which referenced a separate content processor project in the solution. Once I looked at the content processor project, I immediately found the texture being added to the model during the content processing pipeline. It wasn’t obvious at first, or ideal for generalized use. Additionally, the project was rendering instanced models, not user generated primitives, and it didn’t properly explain the last parameter for each vertex element in the vertex declaration — that was a proper ass kicker which confused me! The Float4x4 article was perfect.